How my tech job prepared me for an AI Takeover

Here’s what we’ll learn in this article:

1. What we need to know about the Substack data leak

2. How are we being fooled into thinking we need more biometric security?

3. How to read between the lines of cybersecurity protocols

4. How an army of bots is waging identity war on humanity

5. How we're being guilted into using AI

“Wait beside the river long enough, and the bodies of your enemies will float by.”

~ James Clavell, Shōgun*

Gazing down upon the dry creek bed behind our house, I look for signs of water. Instead all I see is its jagged spine of arid rock, twisting, clamoring, to quench its thirst. Currents feeding streams currently feed data centers where hard drives erase the hard lessons of life. Wells of imagination run dry as children trade daydreams for tablets, rowing down a frigid virtual stream where strangers steer their fated vessels into an abyss.

How did we wind up this far down the technological river without a paddle?

In my past life as a computer salesman, I advised clients on the costly Payment Card Industry Data Security Standard (PCI-DSS) requirements they needed to meet if they wanted to keep running credit cards through the internet on their point-of-sale (POS) system. Many of my customers chose to revert to dial-up, which didn’t carry the same stringent security requirements. It’s much more difficult to tap a physical landline and capture credit card data than to attack an internet connection through its IP (internet protocol) address. If a client wanted to keep processing credit cards through the internet, they’d often have to upgrade their POS system’s hardware, which would cost anywhere from $5,000 to $100,000. If you’re selling pizzas, this is a considerable chunk of change.

Side note: Does anyone remember the manual credit card imprinter?

Oh, how I miss the smell of that carbon paper…

Owners had no idea if someone’s credit card would work - but that was the natural intelligence of trust at play.

Why were we pushing for tougher software security? Credit card processors got their marching orders from banks, which would quickly come up with security regulations in response to a crisis. For instance, in 2013 there was a nationwide data breach at Target, in which hackers exposed the personal and financial information of over 40 million customers. The attackers gained access through compromised credentials belonging to a third-party vendor, resulting in the theft of credit card details, email addresses, and phone numbers. Malicious activity was detected on November 27th but wasn’t disclosed until late December.

In 2014, Home Depot had the payment details of 56 million customers stolen by hackers who had gained access to third-party login credentials. To add insult to injury, both Home Depot and Target were already PCI-DSS compliant, abiding by the rules of credit card processors at the time of their data breaches. Banks and merchant service providers still did not hesitate to push for tougher security, even though these upgrades would do little to prevent an attacker from stealing, or buying, someone else’s password.

Today, not only pizza joints but everyone who uses social media is being told they need to pay a price, one that will ultimately be much more than $10,000. Even though biometric and age verification are being implemented on Substack and other social media sites, these platforms seem to be becoming even less secure.

Seeing the smokestack forest for the trees

Recently Substack announced a data breach in which user accounts, emails, phone numbers and metadata (including account creation dates, subscription information, and IP addresses) were exposed. The breach initially occurred in October, but it took Substack four months to notice. In a world where enterprise security teams typically measure threat detection in hours, how did this 120-day lag even happen?

Ivan Mehta of TechCrunch was the first to report on the issue:

“It’s not clear what exactly the issue was with its systems, and the scope of the data that was accessed. It’s also not yet known why the company took five months to detect the breach, or if it was contacted by hackers demanding a ransom.

“Substack did not say how many users are affected.”

Some speculate the hack occurred due to limitations in Substack’s automated threat detection capabilities. TechCrunch did reach out to Substack for comment, but to date no one knows how the attack occurred.

Let us take the mindset of online security to its logical end:

What would be the ultimate way to prevent hackers from accessing someone’s information?

What would be even more secure than a biometric facial scan?

A retinal scan?

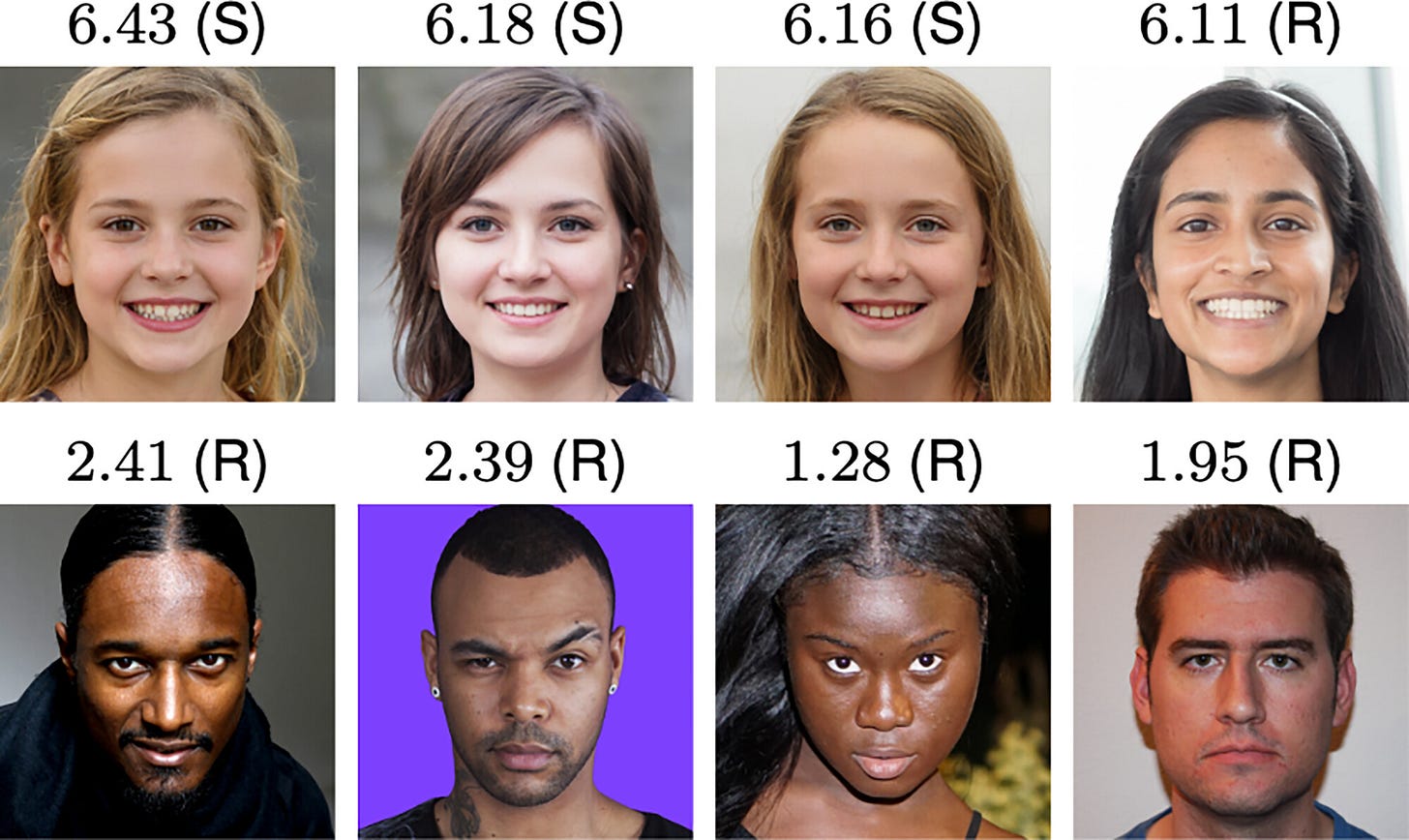

Hackers are already employing what are called injection attacks, in which they write code to fabricate someone else’s identity using AI-generated data. By leveraging camera emulators and specialized software, hackers simulate live streams, bypassing the need for a physical camera or presence.[1]

What about a full body scan using the same millimeter wave technology of airports?

This may not be too far-fetched, as Microsoft patents like 2020060606A1[2] could very easily work with wireless networks by employing ultra-wideband (UWB) communications, pinpointing our location to within 30 centimeters.

A hacker could replicate our retina or selfie, but one could argue that reproducing our dynamic movements and bodily activity would be far more difficult. In theory, this form of solitary confinement ironically aims to deliver freedom from identity theft. However, if history tells us anything, it’s that hackers don’t keep up with technology; they stay ahead of it.

Even though one could argue that prison cells are very safe from the outside world, they still leave you exposed to the general population of other criminals. You won’t get run over by a car when in prison, but if your enemy has keys to your cell, then you are left vulnerable in a bleak forest where no one will hear you shouting.

Grandma wants a fake ID

Cybersecurity executives are sounding the alarm on artificial intelligence and its ability to create fake documents and false personas called deepfakes: audio or video recordings fabricated to make it appear as if someone did something they didn’t do.

Alarm bells are also ringing online about ChatGPT creating fake passports, although these types of headlines are hype.

Although the ChatGPT-fabricated passport likely would not withstand scrutiny due to the absence of an embedded chip, it proved sufficient to bypass the most basic KYC (know your customer) procedures employed by some fintech services like cryptocurrency exchanges. In response, cybersecurity experts are calling for NFC (near-field communication) chip verification, which enables hardware-level authentication.
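To see why a chipless fake can slip past a basic check, here is a minimal Python sketch of the check-digit arithmetic that ICAO Doc 9303 defines for the machine-readable zone printed at the bottom of a passport. The rule is public, so anyone (or any AI) can produce numbers that satisfy it. The function names, and the idea that a "basic" KYC step stops at this kind of format check, are assumptions made for illustration, not a description of any particular exchange's process.

```python
import string

# Character values from ICAO Doc 9303: digits keep their value,
# letters A-Z map to 10-35, and the filler character '<' counts as 0.
_VALUES = {ch: i for i, ch in enumerate(string.digits + string.ascii_uppercase)}
_VALUES["<"] = 0
_WEIGHTS = (7, 3, 1)  # repeating weights applied left to right


def mrz_check_digit(field: str) -> int:
    """Return the ICAO 9303 check digit for one machine-readable-zone field."""
    total = sum(_VALUES[ch] * _WEIGHTS[i % 3] for i, ch in enumerate(field))
    return total % 10


def passes_format_check(field: str, claimed_digit: int) -> bool:
    """Hypothetical 'basic KYC' test: only confirms the printed digit is consistent."""
    return mrz_check_digit(field) == claimed_digit


# Document number from the ICAO specimen passport.
print(mrz_check_digit("L898902C3"))  # 6

# A made-up document number passes just as easily once its digit is computed.
fake = "AB1234567"
print(passes_format_check(fake, mrz_check_digit(fake)))  # True
```

A format check like this only proves the numbers are internally consistent. The chip, by contrast, carries data digitally signed by the issuing country, which arithmetic alone cannot reproduce.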

Translation: a true passport needs hardware to work at the border, which ChatGPT isn't currently replicating:
